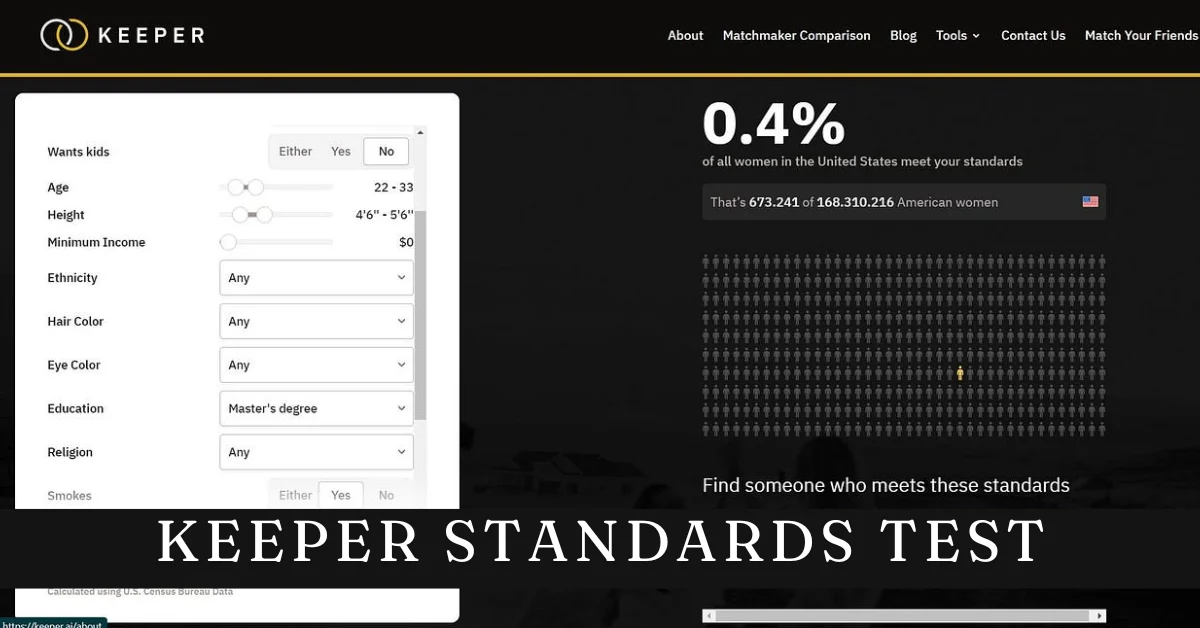

Introduction to Keeper Standards Test

Artificial Intelligence is rapidly transforming our world, offering innovations that were once the stuff of science fiction. However, with great power comes great responsibility. As AI systems become more integrated into our daily lives, it’s crucial to ensure they operate reliably and ethically. Enter the Keeper Standards Test—a benchmark designed to evaluate these very qualities in AI technologies.

This benchmark serves as a litmus test for developers and companies alike, guiding them toward responsible AI development. But what exactly does this entail? And why should we care? Let’s dive deeper into the significance of assessing AI through this critical framework.

Understanding the importance of evaluating AI reliability and ethics

As artificial intelligence becomes more integral to our daily lives, the need for reliability and ethics in AI systems grows. Trust is essential. We rely on these technologies for everything from healthcare to finance.

Evaluating AI reliability ensures that systems perform as intended. It minimizes errors that could lead to significant consequences. When we know an AI system can be trusted, it fosters greater acceptance among users.

Ethics also plays a crucial role in this landscape. Decisions made by AI should reflect fairness and accountability. If algorithms are biased or opaque, they can perpetuate injustice rather than promote equity.

Balancing innovation with responsibility is key. As companies push boundaries in technology development, ethical considerations must guide their journey. Evaluating systems thoroughly for both reliability and ethics paves the way toward a future where AI improves lives without compromising values or safety.

Components of the Keeper Standards Test

The Keeper Standards Test is a structured framework designed to evaluate the reliability and ethics of artificial intelligence systems. It consists of four key components, each assessing a different aspect of AI behavior.

First, there’s the Reliability Assessment. This component examines how well an AI performs its intended tasks under different scenarios. Consistency is crucial here.
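The article does not say how consistency is measured, so the following is only a minimal sketch under assumptions. It scores a toy model on several named scenarios and reports the mean accuracy, the worst scenario, and the spread between scenarios. The names `scenario_accuracy`, `consistency_report`, and `threshold_model`, along with the sample data, are hypothetical and not part of the Keeper Standards Test.

```python
from statistics import mean, pstdev
from typing import Callable, Dict, List, Tuple

# Hypothetical data shape: (input value, expected label)
Example = Tuple[float, int]


def scenario_accuracy(predict: Callable[[float], int], examples: List[Example]) -> float:
    """Fraction of examples in one scenario that the model labels correctly."""
    correct = sum(1 for x, expected in examples if predict(x) == expected)
    return correct / len(examples)


def consistency_report(
    predict: Callable[[float], int], scenarios: Dict[str, List[Example]]
) -> Dict[str, float]:
    """Summarize how evenly the model performs across scenarios."""
    scores = [scenario_accuracy(predict, ex) for ex in scenarios.values()]
    return {
        "mean_accuracy": mean(scores),
        "worst_accuracy": min(scores),
        "spread": pstdev(scores),  # population std. dev. across scenarios
    }


def threshold_model(x: float) -> int:
    """Toy stand-in for an AI system: predicts 1 when the input is at least 0.5."""
    return 1 if x >= 0.5 else 0


# Illustrative scenarios (made-up data): a clean one and a noisy one.
scenarios = {
    "clean": [(0.9, 1), (0.1, 0), (0.8, 1), (0.2, 0)],
    "noisy": [(0.55, 1), (0.45, 0), (0.4, 1), (0.6, 0)],
}
report = consistency_report(threshold_model, scenarios)
print(report)
```

A large spread or a low worst-case score suggests that the system performs well only under favorable conditions, which is the kind of inconsistency a reliability assessment is meant to surface.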

Next up is Ethical Compliance. This evaluates whether an AI adheres to established ethical guidelines, ensuring it operates fairly and transparently.

Another important aspect is Bias Detection. This part identifies any potential biases in data or algorithms that could lead to unfair treatment across different user demographics.
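One common way to make this kind of check concrete is to compare how often each demographic group receives a favorable decision. The sketch below is an illustration under assumptions, not the test's own method: the group labels, the sample decisions, and the helper names `positive_rate_by_group` and `parity_gap` are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def positive_rate_by_group(records: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of favorable decisions (1) per group, given (group, decision) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates: Dict[str, float]) -> float:
    """Difference between the highest and lowest group rates (0 means equal rates)."""
    return max(rates.values()) - min(rates.values())


# Made-up decisions for two hypothetical groups.
decisions = [
    ("group_a", 1), ("group_a", 1), ("group_a", 0), ("group_a", 1),
    ("group_b", 1), ("group_b", 0), ("group_b", 0), ("group_b", 0),
]
rates = positive_rate_by_group(decisions)
gap = parity_gap(rates)
print(rates, gap)
```

A gap near zero means the groups receive favorable outcomes at similar rates. A large gap does not prove unfairness on its own, but it flags where the data or the algorithm deserves a closer look.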

Finally, User Impact Analysis looks at how the AI affects end-users and society as a whole. Understanding these implications helps developers make informed decisions about their technology’s deployment and governance.

Examples of AI systems that have passed or failed the test

The Keeper Standards Test has become a benchmark for assessing AI systems. Some notable examples illustrate this point vividly.

IBM’s Watson Health is one such success story. It effectively navigated the test, demonstrating reliability in processing medical data and aiding healthcare professionals with informed decisions.

On the flip side, facial recognition technology from several companies has encountered significant hurdles. Many of these systems failed due to high error rates and bias against certain demographic groups, raising serious ethical concerns.

Another interesting case is Google’s DeepMind. It passed various components of the Keeper Standards Test by showcasing transparency and accountability in its algorithms.

These instances highlight not only what can be achieved but also what must change within AI development. They serve as critical reminders that trustworthiness isn’t just a checkbox—it’s essential for societal acceptance.

The impact of unreliable and unethical AI on society

Unreliable and unethical AI can have far-reaching consequences. When algorithms falter, they can lead to misguided decisions in critical areas like healthcare or criminal justice. The stakes are high when lives depend on accurate data.

Moreover, biased AI systems perpetuate existing inequalities. They often reflect the prejudices inherent in their training data, leading to unfair treatment of marginalized groups. This creates a cycle of discrimination that is hard to break.

Trust erodes as public awareness grows about these issues. People may become wary of technology designed to improve their lives. This skepticism hampers innovation and slows progress toward beneficial applications.

Unreliable AI can also inadvertently foster a climate of misinformation. Automated systems can spread false narratives rapidly, influencing public opinion and shaping societal norms without accountability.

The ramifications extend beyond individual experiences; they impact democracy itself by skewing perceptions and undermining informed decision-making across communities.

How can companies implement the Keeper Standards Test in their AI development process?

To implement the Keeper Standards Test, companies should first establish a clear framework. This framework needs to define what reliability and ethics mean for their specific AI applications.

Next, they can create internal guidelines that align with the test’s components. These should cover data integrity, bias mitigation, and transparency in algorithms.

Regular training sessions for developers are crucial. Educating teams about ethical considerations ensures they remain vigilant throughout the development process.

Conducting periodic audits is another vital step. Analyzing AI systems against Keeper Standards will help identify potential weaknesses early on.
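A periodic audit can be partly automated by comparing measured metrics against limits the company has set for itself. The sketch below assumes such limits exist. The metric names, the threshold values, and the `Check` and `run_audit` helpers are hypothetical placeholders, not values defined by the Keeper Standards Test.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Check:
    name: str               # which component the check belongs to
    metric: str             # key to look up in the measured metrics
    limit: float            # company-defined threshold (assumed, not prescribed)
    higher_is_better: bool  # True: value must be >= limit; False: value must be <= limit


def run_audit(metrics: Dict[str, float], checks: List[Check]) -> List[str]:
    """Return a human-readable message for every check that fails."""
    failures = []
    for check in checks:
        value = metrics[check.metric]
        passed = value >= check.limit if check.higher_is_better else value <= check.limit
        if not passed:
            failures.append(f"{check.name}: {check.metric}={value} (limit {check.limit})")
    return failures


# Hypothetical thresholds and measurements for one audit cycle.
checks = [
    Check("Reliability", "worst_accuracy", 0.90, higher_is_better=True),
    Check("Bias", "parity_gap", 0.10, higher_is_better=False),
]
metrics = {"worst_accuracy": 0.93, "parity_gap": 0.18}
failures = run_audit(metrics, checks)
print(failures)
```

Running a check like this on a schedule, and recording the failures each time, gives teams an early and repeatable signal of where a system is drifting away from its own standards.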

Engaging with stakeholders—including users—can provide valuable insights into real-world impacts of their technologies. Gathering feedback fosters a culture of accountability and continuous improvement within organizations.

Future considerations for AI development and testing

As technology evolves, the landscape of AI development will change dramatically. Future considerations must include not only enhanced algorithms but also more robust ethical frameworks.

Integrating diverse perspectives in AI design can prevent biases from creeping into systems. This means involving ethicists, sociologists, and community representatives right from the start.

Transparency will become increasingly vital. Users deserve to know how decisions are made and what data drives those outcomes. Clear communication fosters trust between developers and users.

Moreover, adapting testing methods is crucial as AI applications grow in complexity. Simulations that mimic real-world scenarios could provide deeper insights into potential failures or ethical dilemmas.

Continuous education for developers regarding ethical standards and reliability measures should be prioritized to keep pace with rapid advancements in technology. Emphasizing these factors will help shape a responsible future for artificial intelligence.

Conclusion

The Keeper Standards Test emerges as a vital tool in the landscape of artificial intelligence. As AI technologies evolve rapidly, ensuring their reliability and ethical use becomes increasingly critical. This test serves not only to evaluate existing systems but also to shape future developments.

With components designed to scrutinize performance and adherence to ethical norms, the Keeper Standards Test provides a framework that can drive meaningful improvements in AI applications. Companies embracing this evaluation process will likely see enhancements in public trust and user satisfaction.

As we look ahead, integrating such standards into AI development will be paramount for societal well-being. The conversation around reliable and ethical AI is just beginning, but tools like the Keeper Standards Test are paving the way for more responsible innovation. Adopting these practices won’t just benefit businesses; it could redefine our relationship with technology itself.

FAQs

What is the “Keeper Standards Test”?

The Keeper Standards Test is a framework for evaluating the reliability and ethical behavior of AI systems, ensuring they operate safely and fairly.

Why are AI reliability and ethics important?

Reliable and ethical AI systems build trust, minimize errors, and prevent bias, which is crucial for applications in sensitive fields like healthcare and finance.

What components does the Keeper Standards Test assess?

The test assesses reliability, ethical compliance, bias detection, and user impact, ensuring AI systems meet essential safety and fairness standards.

Can you give examples of AI systems that have passed the test?

IBM’s Watson Health successfully passed the Keeper Standards Test, while several facial recognition systems failed due to bias and reliability issues.

How can companies implement the Keeper Standards Test?

Companies can establish a framework, create guidelines, conduct training, perform audits, and engage stakeholders to integrate the test into their AI development process.